Logistic Regression

Key points to be tested: the gradient descent proof, and the derivation that yields the cross-entropy (loss function).

Pretty much everything in this note feels 99% likely to come up.

Straight to the point

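The cross-entropy function for logistic regression

$$J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[ y^{(i)}\log\left(h_\theta(x^{(i)})\right) + \left(1-y^{(i)}\right)\log\left(1-h_\theta(x^{(i)})\right) \right]$$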

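and the partial derivative of $J(\theta)$ with respect to the parameter $\theta_j$ (used for parameter updates in optimization algorithms such as gradient descent):

$$\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}$$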

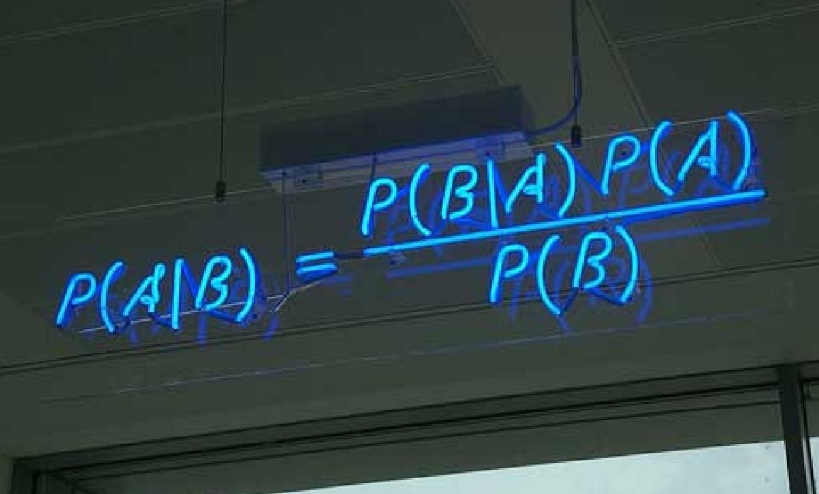

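Cross-entropy function (the logistic regression loss function)

Suppose there are $m$ known samples (batch size $= m$), where $(x^{(i)}, y^{(i)})$ denotes the $i$-th sample and its class label.

Here $x^{(i)} = (1, x_1^{(i)}, x_2^{(i)}, \dots, x_p^{(i)})^T$ is a $(p+1)$-dimensional vector (the leading 1 accounts for the bias term), and $y^{(i)}$ is a number denoting the class:

- in logistic regression (a yes/no question, binary classification), $y^{(i)}$ is 0 or 1;

- in softmax regression (multi-class classification), $y^{(i)}$ is one of $1, 2, \dots, k$, a class index (assuming $k$ classes in total).

Only the binary case is discussed here.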

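The sigmoid function

For an input sample $x^{(i)} = (1, x_1^{(i)}, x_2^{(i)}, \dots, x_p^{(i)})^T$ and model parameters $\theta = (\theta_0, \theta_1, \theta_2, \dots, \theta_p)^T$, we have $\theta^T x^{(i)} := \theta_0 + \theta_1 x_1^{(i)} + \dots + \theta_p x_p^{(i)}$.

In binary problems the sigmoid is commonly used as the hypothesis function, defined as:

$$h_\theta(x^{(i)}) = \frac{1}{1+e^{-\theta^T x^{(i)}}}.$$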
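The definition can be sketched in NumPy as follows. This is a minimal illustration, not a reference implementation: the function name `sigmoid` and the sample values of `theta` and `x` are assumptions made for the example. Evaluating $e^{-|z|}$ instead of $e^{-z}$ is one common way to avoid overflow for large negative inputs.

```python
import numpy as np

def sigmoid(z):
    """Sigmoid 1 / (1 + exp(-z)), written so that exp never overflows."""
    z = np.asarray(z, dtype=float)
    e = np.exp(-np.abs(z))  # always in (0, 1]
    # z >= 0: 1 / (1 + e^{-z});  z < 0: e^{z} / (1 + e^{z}) (same value, rewritten)
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))

# Hypothetical parameters and one sample (leading 1 is the bias entry).
theta = np.array([0.5, -1.0, 2.0])
x = np.array([1.0, 2.0, 0.5])
h = sigmoid(theta @ x)  # h_theta(x), a value strictly between 0 and 1
```

Because $h_\theta(x)$ always lies between 0 and 1, it can be read as a probability.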

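Because logistic regression is a 0/1 binary classification problem, we have

$$\begin{aligned}
P(\hat{y}^{(i)}=1 \mid x^{(i)};\theta) &= h_\theta(x^{(i)}) \\
P(\hat{y}^{(i)}=0 \mid x^{(i)};\theta) &= 1 - h_\theta(x^{(i)})
\end{aligned}$$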

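Deriving the cross-entropy

Setting the concept of "entropy" aside for now, the explanation below arrives at the loss function we want from a simple, intuitive angle. Taking the logarithm of a probability preserves its monotonicity, so

$$\begin{aligned}
\log P(\hat{y}^{(i)}=1 \mid x^{(i)};\theta) &= \log h_\theta(x^{(i)}) = \log\frac{1}{1+e^{-\theta^T x^{(i)}}} \\
\log P(\hat{y}^{(i)}=0 \mid x^{(i)};\theta) &= \log\left(1-h_\theta(x^{(i)})\right) = \log\frac{e^{-\theta^T x^{(i)}}}{1+e^{-\theta^T x^{(i)}}}
\end{aligned}$$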

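Then for the $i$-th sample, the combined log-probability that the hypothesis function represents the label correctly is:

$$\begin{aligned}
& I\{y^{(i)}=1\}\log P(\hat{y}^{(i)}=1 \mid x^{(i)};\theta) + I\{y^{(i)}=0\}\log P(\hat{y}^{(i)}=0 \mid x^{(i)};\theta) \\
&= y^{(i)}\log P(\hat{y}^{(i)}=1 \mid x^{(i)};\theta) + \left(1-y^{(i)}\right)\log P(\hat{y}^{(i)}=0 \mid x^{(i)};\theta) \\
&= y^{(i)}\log\left(h_\theta(x^{(i)})\right) + \left(1-y^{(i)}\right)\log\left(1-h_\theta(x^{(i)})\right)
\end{aligned}$$

where $I\{y^{(i)}=1\}$ and $I\{y^{(i)}=0\}$ are indicator functions: each equals 1 when the condition inside the braces holds and 0 otherwise. I won't elaborate further here.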
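As a small worked example (the value $h_\theta(x^{(i)}) = 0.8$ is chosen purely for illustration), only one of the two terms survives for any given label:

$$\begin{aligned}
y^{(i)} = 1:&\quad 1\cdot\log(0.8) + 0\cdot\log(0.2) = \log(0.8) \approx -0.223 \\
y^{(i)} = 0:&\quad 0\cdot\log(0.8) + 1\cdot\log(0.2) = \log(0.2) \approx -1.609
\end{aligned}$$

The log-probability is closer to 0 when the prediction agrees with the label, and strongly negative when it does not.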

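So over all $m$ samples, we obtain a measure of how well the model represents the whole training set:

$$\sum_{i=1}^{m}\left[ y^{(i)}\log\left(h_\theta(x^{(i)})\right) + \left(1-y^{(i)}\right)\log\left(1-h_\theta(x^{(i)})\right) \right]$$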

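From the meaning above (the probability of representing the labels correctly), the larger this value, the better the model represents the data. When updating parameters or judging a model, however, we need a loss function (or cost function) that fully reflects the model's error, and we want that loss to be as small as possible. To reconcile the two, we let the cost function be the negative of the combined log-probability above, averaged over the $m$ samples:

$$J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[ y^{(i)}\log\left(h_\theta(x^{(i)})\right) + \left(1-y^{(i)}\right)\log\left(1-h_\theta(x^{(i)})\right) \right]$$

This is the well-known cross-entropy loss function.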
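A minimal NumPy sketch of this loss is shown below. The function name `cross_entropy`, the clipping constant `eps`, and the toy data are assumptions for illustration only; clipping is one simple way to keep `log` away from 0 and is not part of the formula itself.

```python
import numpy as np

def cross_entropy(theta, X, y, eps=1e-12):
    """J(theta) = -(1/m) * sum(y*log(h) + (1-y)*log(1-h)).

    X: (m, p+1) array whose first column is all ones (bias term).
    y: (m,) array of 0/1 labels.
    eps: hypothetical clipping constant, added here to avoid log(0).
    """
    h = 1.0 / (1.0 + np.exp(-(X @ theta)))
    h = np.clip(h, eps, 1.0 - eps)
    return -np.mean(y * np.log(h) + (1.0 - y) * np.log(1.0 - h))

# Toy data: three samples, one feature plus the bias column.
X = np.array([[1.0, 0.5], [1.0, -1.2], [1.0, 2.0]])
y = np.array([1.0, 0.0, 1.0])

loss_at_zero = cross_entropy(np.zeros(2), X, y)          # h = 0.5 everywhere, so J = log(2)
loss_better = cross_entropy(np.array([0.0, 2.0]), X, y)  # parameters that fit the labels better
```

With all parameters at zero the model outputs 0.5 for every sample, so the loss equals $\log 2$; parameters that fit the labels better give a smaller value.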

As for the concept of entropy, it helps to look at the information entropy $E[-\log p_i] = -\sum_{i=1}^{m} p_i \log p_i$.
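To make the entropy formula concrete, here is a short sketch. The helper name `entropy` and the example distributions are illustrative assumptions; the natural logarithm is used, so the result is in nats.

```python
import numpy as np

def entropy(p):
    """Shannon entropy -sum(p_i * log(p_i)); terms with p_i = 0 contribute 0."""
    p = np.asarray(p, dtype=float)
    nz = p > 0  # skip zero-probability outcomes to avoid log(0)
    return -np.sum(p[nz] * np.log(p[nz]))

fair = entropy([0.5, 0.5])    # equals log(2), the maximum for two outcomes
skewed = entropy([0.9, 0.1])  # smaller: the outcome is more predictable
```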

Differentiating the cross-entropy (loss function)

Long and tedious.

Element-wise form

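The cross-entropy loss function is:

$$J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[ y^{(i)}\log\left(h_\theta(x^{(i)})\right) + \left(1-y^{(i)}\right)\log\left(1-h_\theta(x^{(i)})\right) \right]$$

where:

$$\begin{aligned}
\log h_\theta(x^{(i)}) &= \log\frac{1}{1+e^{-\theta^T x^{(i)}}} = -\log\left(1+e^{-\theta^T x^{(i)}}\right), \\
\log\left(1-h_\theta(x^{(i)})\right) &= \log\left(1-\frac{1}{1+e^{-\theta^T x^{(i)}}}\right) = \log\left(\frac{e^{-\theta^T x^{(i)}}}{1+e^{-\theta^T x^{(i)}}}\right) \\
&= \log\left(e^{-\theta^T x^{(i)}}\right) - \log\left(1+e^{-\theta^T x^{(i)}}\right) = -\theta^T x^{(i)} - \log\left(1+e^{-\theta^T x^{(i)}}\right).
\end{aligned}$$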

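Substituting these in gives:

$$\begin{aligned}
J(\theta) &= -\frac{1}{m}\sum_{i=1}^{m}\left[ -y^{(i)}\log\left(1+e^{-\theta^T x^{(i)}}\right) + \left(1-y^{(i)}\right)\left(-\theta^T x^{(i)} - \log\left(1+e^{-\theta^T x^{(i)}}\right)\right) \right] \\
&= -\frac{1}{m}\sum_{i=1}^{m}\left[ y^{(i)}\theta^T x^{(i)} - \theta^T x^{(i)} - \log\left(1+e^{-\theta^T x^{(i)}}\right) \right] \\
&= -\frac{1}{m}\sum_{i=1}^{m}\left[ y^{(i)}\theta^T x^{(i)} - \log e^{\theta^T x^{(i)}} - \log\left(1+e^{-\theta^T x^{(i)}}\right) \right] \\
&= -\frac{1}{m}\sum_{i=1}^{m}\left[ y^{(i)}\theta^T x^{(i)} - \left(\log e^{\theta^T x^{(i)}} + \log\left(1+e^{-\theta^T x^{(i)}}\right)\right) \right] \\
&= -\frac{1}{m}\sum_{i=1}^{m}\left[ y^{(i)}\theta^T x^{(i)} - \log\left(1+e^{\theta^T x^{(i)}}\right) \right]
\end{aligned}$$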
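The simplification can be sanity-checked numerically. The sketch below evaluates both the original and the simplified expression on randomly generated data (the data, seed, and variable names are assumptions for illustration) and the two values should agree up to floating-point error.

```python
import numpy as np

rng = np.random.default_rng(0)
m, p = 50, 3
X = np.hstack([np.ones((m, 1)), rng.normal(size=(m, p))])  # bias column first
y = rng.integers(0, 2, size=m).astype(float)               # random 0/1 labels
theta = rng.normal(size=p + 1)

z = X @ theta                    # theta^T x for every sample
h = 1.0 / (1.0 + np.exp(-z))     # sigmoid hypothesis

# Original form: -(1/m) * sum(y*log(h) + (1-y)*log(1-h))
J_original = -np.mean(y * np.log(h) + (1.0 - y) * np.log(1.0 - h))

# Simplified form: -(1/m) * sum(y*z - log(1 + e^z)).
# np.logaddexp(0, z) computes log(e^0 + e^z) = log(1 + e^z) stably.
J_simplified = -np.mean(y * z - np.logaddexp(0.0, z))
```

A practical side benefit of the simplified form is that it never takes the logarithm of a value near zero.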

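Now compute the partial derivative of $J(\theta)$ with respect to the $j$-th parameter component $\theta_j$:

$$\begin{aligned}
\frac{\partial J(\theta)}{\partial \theta_j} &= \frac{\partial}{\partial \theta_j}\left(\frac{1}{m}\sum_{i=1}^{m}\left[ \log\left(1+e^{\theta^T x^{(i)}}\right) - y^{(i)}\theta^T x^{(i)} \right]\right) \\
&= \frac{1}{m}\sum_{i=1}^{m}\left[ \frac{\partial}{\partial \theta_j}\log\left(1+e^{\theta^T x^{(i)}}\right) - \frac{\partial}{\partial \theta_j}\left(y^{(i)}\theta^T x^{(i)}\right) \right] \\
&= \frac{1}{m}\sum_{i=1}^{m}\left( \frac{x_j^{(i)} e^{\theta^T x^{(i)}}}{1+e^{\theta^T x^{(i)}}} - y^{(i)} x_j^{(i)} \right) \\
&= \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}
\end{aligned}$$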

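This is the derivative of the cross-entropy with respect to the parameters:

$$\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}$$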
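One way to check a derivative like this is to compare it against a central finite difference. The following is a sketch under assumptions: the function names, the step size `step`, and the random test data are all made up for the example.

```python
import numpy as np

def loss(theta, X, y):
    """J(theta) in the simplified form (1/m) * sum(log(1 + e^z) - y*z)."""
    z = X @ theta
    return np.mean(np.logaddexp(0.0, z) - y * z)

def grad(theta, X, y):
    """Analytic gradient: component j is (1/m) * sum_i (h_i - y_i) * x_ij."""
    h = 1.0 / (1.0 + np.exp(-(X @ theta)))
    m = len(y)
    return np.array([np.sum((h - y) * X[:, j]) / m for j in range(X.shape[1])])

def numeric_grad(theta, X, y, step=1e-6):
    """Central-difference approximation of each partial derivative."""
    g = np.zeros_like(theta)
    for j in range(len(theta)):
        e = np.zeros_like(theta)
        e[j] = step
        g[j] = (loss(theta + e, X, y) - loss(theta - e, X, y)) / (2 * step)
    return g

rng = np.random.default_rng(1)
X = np.hstack([np.ones((20, 1)), rng.normal(size=(20, 2))])
y = rng.integers(0, 2, size=20).astype(float)
theta = rng.normal(size=3)

analytic = grad(theta, X, y)
numeric = numeric_grad(theta, X, y)
```

If the derivation is right, `analytic` and `numeric` should match to several decimal places.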

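Vector form

Only the notation differs; the process is essentially the same, and the vector form feels clearer.

Ignoring the factor $\frac{1}{m}$ in front of the cross-entropy:

$$J(\theta) = -\left[ y^T\log h_\theta(x) + \left(1-y^T\right)\log\left(1-h_\theta(x)\right) \right]$$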

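Substituting $h_\theta(x) = \frac{1}{1+e^{-\theta^T x}}$ gives:

$$\begin{aligned}
J(\theta) &= -\left[ y^T\log\frac{1}{1+e^{-\theta^T x}} + \left(1-y^T\right)\log\frac{e^{-\theta^T x}}{1+e^{-\theta^T x}} \right] \\
&= -\left[ -y^T\log\left(1+e^{-\theta^T x}\right) + \left(1-y^T\right)\log e^{-\theta^T x} - \left(1-y^T\right)\log\left(1+e^{-\theta^T x}\right) \right] \\
&= -\left[ \left(1-y^T\right)\log e^{-\theta^T x} - \log\left(1+e^{-\theta^T x}\right) \right] \\
&= -\left[ \left(1-y^T\right)\left(-\theta^T x\right) - \log\left(1+e^{-\theta^T x}\right) \right]
\end{aligned}$$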

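Now differentiate with respect to $\theta$; the leading minus sign is absorbed into the terms:

$$\begin{aligned}
\frac{\partial J(\theta)}{\partial \theta} &= -\frac{\partial}{\partial \theta}\left[ \left(1-y^T\right)\left(-\theta^T x\right) - \log\left(1+e^{-\theta^T x}\right) \right] \\
&= \left(1-y^T\right)x - \frac{e^{-\theta^T x}}{1+e^{-\theta^T x}}\,x \\
&= \left(\frac{1}{1+e^{-\theta^T x}} - y^T\right)x \\
&= \left(h_\theta(x) - y^T\right)x
\end{aligned}$$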
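In code, the vector form maps onto a single matrix product. The sketch below assumes the samples are stacked as rows of a matrix `X` (a layout chosen for this example, not stated in the derivation) and puts the $\frac{1}{m}$ factor back in; the function name `gradient` and the toy data are likewise illustrative.

```python
import numpy as np

def gradient(theta, X, y):
    """Gradient of J as a vector: (1/m) * X^T (h - y)."""
    h = 1.0 / (1.0 + np.exp(-(X @ theta)))  # one prediction per row of X
    return X.T @ (h - y) / len(y)

# Toy data: rows are samples, first column is the bias entry.
X = np.array([[1.0, 2.0], [1.0, -1.0], [1.0, 0.5]])
y = np.array([1.0, 0.0, 1.0])

g = gradient(np.zeros(2), X, y)  # at theta = 0 every prediction is 0.5
```

Entry `g[j]` is the element-wise sum over samples of $(h_\theta(x^{(i)}) - y^{(i)})\,x_j^{(i)}$ divided by $m$, so the matrix product and the per-component formula describe the same quantity.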

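Gradient descent parameter update

After initializing the parameters $\theta$, repeat:

$$\theta_j := \theta_j - \alpha\frac{\partial J(\theta)}{\partial \theta_j}$$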

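The formula above is the parameter update rule. Combining it with the cross-entropy derivative obtained earlier gives:

$$\theta_j := \theta_j - \alpha\frac{1}{m}\sum_{i=1}^{m}\left[h_\theta(x^{(i)}) - y^{(i)}\right]x_j^{(i)}$$

where $\alpha$ is the learning rate.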
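Putting the pieces together, a batch gradient descent loop might look like the sketch below. Everything specific here is an assumption for illustration: the function name `fit`, the learning rate `alpha=0.1`, the fixed iteration count, and the synthetic one-feature dataset. Note the update subtracts $\alpha$ times $(h_\theta(x) - y)\,x$, matching the sign of the gradient.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit(X, y, alpha=0.1, n_iters=2000):
    """Batch gradient descent: theta := theta - alpha * (1/m) * X^T (h - y)."""
    theta = np.zeros(X.shape[1])          # initialize parameters at zero
    for _ in range(n_iters):
        grad = X.T @ (sigmoid(X @ theta) - y) / len(y)
        theta -= alpha * grad             # step against the gradient
    return theta

# Synthetic data: the label is 1 exactly when the single feature is positive.
rng = np.random.default_rng(0)
m = 200
x1 = rng.normal(size=m)
X = np.column_stack([np.ones(m), x1])     # bias column first
y = (x1 > 0).astype(float)

theta = fit(X, y)
accuracy = np.mean((sigmoid(X @ theta) >= 0.5) == y)
```

A fixed number of iterations keeps the sketch short; in practice one would more likely stop when the loss or the gradient norm stops changing.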